Description

Evaluation plans describe how to measure the impacts of your program and the effectiveness of your process for delivering residential energy efficiency products and services. The Evaluation & Data Collection component provides detailed information about the purpose and content of different types of evaluation plans, and describes two types of evaluations that are relevant to this handbook:

- Impact evaluations are evaluations of program-specific changes (e.g., changes in energy and/or demand use), directly or indirectly induced, associated with an energy efficiency program.

- Process evaluations are systematic assessments of an energy efficiency program for the purpose of documenting program operations at the time of the examination, and identifying and recommending improvements to increase the program’s efficiency or effectiveness while maintaining high levels of participant satisfaction.

This handbook focuses on developing program design and customer experience metrics, along with strategies for measuring them, that will be useful for impact and process evaluations. The two types of metrics are:

- Program metrics, which measure the extent to which your program is achieving its goals and objectives. Program metrics will be primarily useful for impact evaluations (i.e., what impact are we having?), but tracking progress toward goals may also be useful for process evaluations (i.e., how can we enhance our impact?).

- Customer experience metrics, which measure how well you have integrated all of your program components to provide customers with a satisfying experience and run an efficient and effective operation. Customer experience metrics will be primarily useful for process evaluations.

These metrics and measurement strategies will contribute to the development of your program’s evaluation plans and will also help you assess and improve your program over time.

Measuring the effectiveness of your program design and the quality of your customers’ experience involves:

- Metrics, which are quantitative expressions of program outcomes or activities (e.g., number of homes upgraded, percent energy savings).

- Measurement strategies, which are the actions taken to collect data for your metrics. These strategies describe what will be collected, who will collect it, and with what frequency.

- A systematic process for information sharing and review to periodically examine the metrics and assess your program delivery process and customer satisfaction.

Handbooks for Marketing and Outreach, Financing, and Contractor Engagement & Workforce Development all describe a similar process of developing metrics, measuring them, and developing a plan for sharing and reviewing data to evaluate each of these specific program components.

Your approach to measuring the effectiveness of your program will be partly based on the amount of funds allocated to evaluation in your program’s budget. Many programs have found evaluation to be an essential program management function. In addition to providing insights to help program managers make informed decisions, evaluation data may also be necessary to demonstrate to funders or regulators that you are achieving your goals.

Key steps are:

- Establish program metrics that reflect your goals and objectives

- Establish direct and indirect customer experience metrics

- Document measurement strategies for collecting data

- Establish responsibilities for internal review of evaluation results.

Step by Step

Establishing program metrics and measurement strategies involves several steps.

Develop plan for evaluating customer experience and overall program delivery

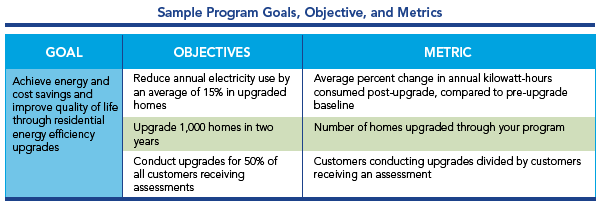

To develop metrics, start with your program goals and objectives. Each of your program objectives should link to an overall program goal and include a specific measurable target and timeline. Metrics will help you measure whether you are meeting your objectives. The table below illustrates the relationship between objectives and metrics.

Metrics to assess progress toward your program goals may include:

- Activity metrics. These include the number of assessments, upgrades, loans, rebates, or other program activities.

- Participation metrics. These include the number or percent of homes in an area that are participating in the program, have been assessed, have been upgraded, and have achieved other program milestones. Participation metrics also include conversion rates, which measure how many homeowners are progressing through all steps in the upgrade process (e.g., the percentage of customers that have undertaken an upgrade, out of the total number receiving an assessment).

- Energy and demand savings attributed to the program. Energy and demand savings can be estimated by comparing measured energy consumption before and after upgrades are completed (e.g., billing analysis), through simulation modeling, or by using deemed savings estimates. Savings targets can be expressed on an annual or lifetime basis.

- Cost savings for customers. Cost savings are typically estimated based on calculations of energy savings and energy prices.

- Other outcomes, such as gallons of water conserved or greenhouse gas emissions reduced. Measurement of these outcomes is often based on estimates.
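To make the participation and cost-savings metrics above concrete, here is a minimal Python sketch. The function names and all numbers are hypothetical; it assumes you already have counts of assessments, upgrades, and eligible homes from your tracking system, and it estimates customer cost savings with simple savings-times-price arithmetic (real estimates may use rate schedules or time-varying prices).

```python
# Hypothetical participation and cost-savings metrics computed from simple counts.
# All names and values are illustrative assumptions, not program data.


def conversion_rate(upgrades: int, assessments: int) -> float:
    """Percent of assessed homes that went on to complete an upgrade."""
    if assessments == 0:
        return 0.0
    return 100.0 * upgrades / assessments


def participation_rate(participants: int, eligible_homes: int) -> float:
    """Percent of eligible homes in the service area that participated."""
    if eligible_homes == 0:
        return 0.0
    return 100.0 * participants / eligible_homes


def annual_cost_savings(kwh_saved: float, price_per_kwh: float,
                        therms_saved: float = 0.0,
                        price_per_therm: float = 0.0) -> float:
    """Customer bill savings estimated as energy savings times energy prices."""
    return kwh_saved * price_per_kwh + therms_saved * price_per_therm


print(f"Conversion rate: {conversion_rate(120, 400):.1f}%")
print(f"Participation rate: {participation_rate(400, 20_000):.1f}%")
print(f"Annual cost savings: ${annual_cost_savings(1_500, 0.13, 200, 1.10):.2f}")
```

Tracking conversion rates at each step (inquiry to assessment, assessment to upgrade) in this way shows where customers drop out of the process.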

Benchmarking for Success

To effectively assess your program’s progress toward your goals and objectives, it is important to measure progress against clear benchmarks. The U.S. Department of Energy’s “Guide for Benchmarking Residential Energy Efficiency Program Progress with Examples” can help you identify metrics to measure goals, collect information on progress, and analyze and share results. The guide describes how programs have used metrics to effectively engage customers and achieve savings, and offers a step-by-step action plan for developing your program’s benchmarking strategy. It also provides 26 recommended benchmarking impact indicators that you can use to assess your total impact. Using metrics to make your program’s performance relative to its goals visible makes it easier to adjust and improve your program design.

Calculating Energy Savings

Energy savings are one of the most critical program metrics to be tracked, reported, and ultimately evaluated. The methods you use to estimate savings resulting from your program will depend on your program design, measures promoted, and—for some programs—regulatory requirements. In some cases, you may need to use multiple approaches. Most programs initially measure savings based on deemed savings estimates or energy modeling and then verify these savings later using analysis of energy bills, most often based on a full year of billing after upgrades are completed. These three methods—deemed savings, energy modeling, and billing analysis—are described below.

Deemed savings are estimated energy savings from specific measures (e.g., insulation, duct sealing) based on historical evaluations of actual savings from measure installation. Deemed savings are generally calculated only for measures with well-known and consistent performance characteristics. Deemed savings estimates can be derived from a number of sources, including past program evaluations, engineering estimates, and extrapolated results from other programs. The advantage of deemed savings is that they are straightforward to use and do not require monitoring of actual energy use. The disadvantages are that extrapolated savings may not be accurate for different climate zones or buildings, the methodologies used to calculate deemed savings can differ among the organizations calculating them, and deemed savings rarely account for the combined effects of multiple measures. The U.S. Department of Energy’s Uniform Methods Project is developing a framework and a set of protocols for consistent methodologies to determine the energy savings from specific energy efficiency measures and programs.

Utilities that make extensive use of deemed savings often document them in technical reference manuals. For example, utility energy efficiency program administrators in Massachusetts have developed a manual that describes how deemed savings will be calculated for energy efficiency programs in the state. It includes algorithms, default assumptions, and other information for calculating deemed savings from a variety of energy efficiency measures.
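A deemed-savings tally of the kind a technical reference manual supports can be sketched as a lookup table multiplied by installed quantities. The measure names and per-unit values below are placeholders invented for illustration, not values from the Massachusetts manual or any other TRM. Actual TRM algorithms often include adjustment factors (e.g., for climate zone or baseline efficiency) that are omitted here.

```python
# Hypothetical deemed-savings table, of the kind a program might transcribe
# from a technical reference manual (TRM). Values are placeholders only.
DEEMED_KWH_PER_UNIT = {
    "attic_insulation": 0.5,  # kWh per square foot treated (assumed)
    "duct_sealing": 300.0,    # kWh per home (assumed)
    "led_lamp": 30.0,         # kWh per lamp (assumed)
}


def deemed_savings(measures):
    """Sum deemed savings: units installed times deemed kWh per unit.

    Like most simple deemed-savings tallies, this adds measures independently
    and ignores interactive effects between measures.
    """
    total = 0.0
    for measure, units in measures:
        total += DEEMED_KWH_PER_UNIT[measure] * units
    return total


# Hypothetical project: 1,000 sq ft of attic insulation, duct sealing, 10 LED lamps.
project = [("attic_insulation", 1_000), ("duct_sealing", 1), ("led_lamp", 10)]
print(f"Deemed savings: {deemed_savings(project):.0f} kWh/yr")
```

The simple additive structure is what makes deemed savings easy to apply, and it is also why they rarely capture the combined effects of multiple measures.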

Energy modeling is often used for measures with savings that cannot be estimated with a single deemed value. These savings estimates may depend on a number of project-specific factors. For example, ceiling insulation savings will vary based on the R-value of insulation before and after an upgrade, the treated area, and the number of heating degree days. Some programs use home assessment software tools that have such algorithms embedded in them. Energy modeling software allows programs to estimate savings associated with single or multiple recommended measures.
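The ceiling insulation example can be illustrated with a simplified degree-day heat-loss calculation: annual conductive heat loss through a surface is approximately U × A × HDD × 24 Btu, where U = 1/R. The sketch below assumes a fuel-fired heating system with a hypothetical 80% efficiency and reports savings in therms. It ignores framing, air leakage, attic temperature, and other effects that modeling software accounts for, so treat it as an illustration of how the inputs interact, not a substitute for a modeling tool.

```python
BTU_PER_THERM = 100_000


def ceiling_insulation_savings_therms(area_ft2, r_before, r_after, hdd,
                                      heating_efficiency=0.80):
    """Simplified degree-day estimate of annual heating savings, in therms.

    Conductive heat loss is approximated as U * A * HDD * 24 Btu/yr, with
    U = 1/R in Btu/(hr*ft2*F) and HDD in Fahrenheit degree-days.
    """
    if r_before <= 0 or r_after <= r_before:
        raise ValueError("expected 0 < r_before < r_after")
    delta_u = 1.0 / r_before - 1.0 / r_after
    btu_saved_at_house = delta_u * area_ft2 * hdd * 24
    # Divide by heating efficiency to convert delivered heat to fuel input.
    return btu_saved_at_house / heating_efficiency / BTU_PER_THERM


# Hypothetical home: 1,000 ft2 treated, R-11 to R-49, 6,000 HDD, 80% efficient furnace.
print(f"{ceiling_insulation_savings_therms(1_000, 11, 49, 6_000):.1f} therms/yr")
```

Each input named in the paragraph above (R-value before and after, treated area, heating degree days) changes the result directly, which is why a single deemed value does not fit this measure well.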

Billing analysis provides estimates of savings based on statistical analysis of large datasets of participant and control groups. Typically, a year or more of program participation data is needed before such analyses can be done. Results from billing analyses are often used to correct prior savings estimates derived from one of the two approaches above and/or to inform prospective deemed savings estimates. The Uniform Methods Project recommends billing analysis as the preferred approach for whole-building residential energy efficiency programs.
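One common statistical design for billing analysis with participant and control groups is difference-in-differences: the change in participants’ usage minus the change in the control group’s usage over the same period. The minimal sketch below uses hypothetical annual consumption values and omits weather normalization, regression controls, and the large sample sizes a real evaluation would need. It only shows why the control group matters.

```python
from statistics import mean


def did_savings(part_pre, part_post, ctrl_pre, ctrl_post):
    """Difference-in-differences estimate of average savings per home.

    Each argument is a list of annual consumption values (e.g., kWh), one per home.
    Assuming the control group is comparable, its change reflects weather, prices,
    and other factors affecting everyone; subtracting it leaves the change
    attributed to the program.
    """
    participant_change = mean(part_pre) - mean(part_post)
    control_change = mean(ctrl_pre) - mean(ctrl_post)
    return participant_change - control_change


# Hypothetical annual kWh for three participant homes and three control homes.
savings = did_savings(
    part_pre=[12_000, 11_000, 13_000], part_post=[10_600, 9_700, 11_200],
    ctrl_pre=[11_900, 12_300, 12_100], ctrl_post=[11_600, 12_100, 11_700],
)
print(f"Estimated savings: {savings:.0f} kWh per home per year")
```

Without the control group, the participants’ raw drop in usage would overstate program savings, because part of that drop also occurred in homes that did not participate.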

EnergySmart Tracks Program Metrics

Boulder County’s EnergySmart program established a customer relationship management system to track and display customer information, including deemed savings from upgrades. Among other metrics, it allowed the program to actively monitor overall program accomplishments and key activities:

- Total energy savings from all projects

- Total greenhouse gas reductions from projects

- Number of rebates awarded

- Cumulative number of upgrades

- Cumulative number of homes enrolled in the program

- The types of services provided to homeowners (e.g., energy advisor, assessment, upgrades)

- Customer motivations for participating in the program.

For more on EnergySmart’s approach, see the program’s presentation on using data to monitor its projects and impacts.

You will need to identify metrics for goals and objectives related to specific program components, such as marketing and outreach, financing, and contractor engagement & workforce development, to track the progress made by those parts of your program.

Measuring Cost-Effectiveness

You may need to establish metrics to demonstrate whether your program is meeting regulatory cost-effectiveness tests. Understanding these tests is important if your program is run by a utility, you partner closely with utilities in delivering a program, or you are otherwise impacted by state public utility commission regulation. If your program is not deemed cost-effective, you may not get funding unless you change your program design to deliver more energy savings at lower cost.

Key metrics used to assess cost-effectiveness include:

- Benefit/cost ratio

- Net benefits

- Total benefits

- Total cost.

These metrics can be measured using one or more of several cost-effectiveness tests:

- Participant cost test (PCT)

- Utility/program administrator cost test (PACT)

- Ratepayer impact measure test (RIM)

- Total resource cost test (TRC)

- Societal cost test (SCT).

Depending on the regulatory jurisdiction, cost-effectiveness metrics, particularly benefit/cost ratios, may need to be applied at different levels of program engagement, such as:

- Measure

- Program

- Sector

- Portfolio.

The cost-effectiveness targets may also vary depending on the level of program engagement. For example, while there may be a target benefit/cost ratio of 1.0 or greater at the portfolio and program level, not all jurisdictions require that individual measures be cost-effective—i.e., the benefit/cost ratio may be less than 1.0.
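The cost-effectiveness metrics listed above can be computed from discounted benefit and cost streams. This sketch assumes a single upfront cost, a constant annual benefit over the measure life, and a hypothetical discount rate. Which benefits and costs are counted (bill savings, avoided utility costs, incentives, non-energy benefits) depends on the test (PCT, PACT, RIM, TRC, or SCT) and on your jurisdiction’s rules, so the inputs here are placeholders.

```python
def present_value(annual_amount, years, discount_rate):
    """Present value of a constant amount received at the end of each year."""
    return sum(annual_amount / (1 + discount_rate) ** t for t in range(1, years + 1))


def cost_effectiveness(annual_benefit, measure_life_years, upfront_cost, discount_rate):
    """Total benefits, total cost, net benefits, and benefit/cost ratio.

    Assumes all costs occur up front and benefits are a constant annual stream.
    """
    total_benefits = present_value(annual_benefit, measure_life_years, discount_rate)
    total_cost = upfront_cost
    return {
        "total_benefits": total_benefits,
        "total_cost": total_cost,
        "net_benefits": total_benefits - total_cost,
        "benefit_cost_ratio": total_benefits / total_cost,
    }


# Hypothetical measure: $400/yr in benefits for 20 years, $4,000 cost, 5% discount rate.
result = cost_effectiveness(400, 20, 4_000, 0.05)
print(f"B/C ratio: {result['benefit_cost_ratio']:.2f}, "
      f"net benefits: ${result['net_benefits']:,.0f}")
```

A benefit/cost ratio of 1.0 or greater corresponds to net benefits of zero or more; applying the same calculation to measures, programs, sectors, or the whole portfolio simply changes which benefits and costs are summed.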

For more information on cost-effectiveness tests, see the Evaluation & Data Collection handbook, Develop Evaluation Plans. The Existing Homes Program Guide by the Consortium for Energy Efficiency is another helpful resource.

Like program metrics, customer experience metrics are an important way to understand if your overall program design is effectively delivering services to your customers. No residential energy efficiency program will achieve its energy savings and other goals if customers are not satisfied with the program (e.g., ease of participating in the program, responsiveness of program staff and contractors, value of program services). Customer experience metrics are also a valuable diagnostic tool for understanding where problems are developing and identifying ways to improve program design and delivery.

Direct Customer Satisfaction Metrics

Often based on phone or email surveys at the end of the upgrade process, direct customer satisfaction metrics capture overall satisfaction with a program (e.g., the number of customers rating a program as “high” or “very high” on a scale from very low to very high) as well as satisfaction with particular components (e.g., assessment or upgrade quality and value, contractor timeliness, program communications).

Templates and Examples of Customer Surveys

Customer satisfaction metrics can be developed from responses to customer surveys, either by using the response to a single question or by “rolling up” responses to multiple questions into a single satisfaction score. The following sample customer surveys and survey questions were developed by the Better Buildings Neighborhood Program:

- Sample email survey template for successful program participants

- Research questions and survey questions for successful, drop-out and screened-out program participants.

- Sample phone survey template for energy efficiency program drop-outs

- Sample phone survey for applicants who have been screened out from participating in a residential energy efficiency program.
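Survey results can be turned into satisfaction metrics in the two ways described above: the share of respondents giving a “high” or “very high” rating to a single question, or a composite score that rolls several questions together. The sketch below assumes a hypothetical 1-to-5 scale (1 = very low, 5 = very high), invented question keys, and an unweighted mean for the roll-up; a program might weight questions differently.

```python
from statistics import mean

# Hypothetical survey responses on a 1-5 scale (1 = very low, 5 = very high).
responses = [
    {"overall": 5, "assessment": 4, "contractor": 5, "communication": 4},
    {"overall": 4, "assessment": 3, "contractor": 4, "communication": 4},
    {"overall": 2, "assessment": 3, "contractor": 1, "communication": 2},
    {"overall": 5, "assessment": 5, "contractor": 5, "communication": 4},
]


def top_two_box(responses, question):
    """Percent of respondents rating a question 4 ("high") or 5 ("very high")."""
    ratings = [r[question] for r in responses if question in r]
    if not ratings:
        return 0.0
    return 100.0 * sum(1 for x in ratings if x >= 4) / len(ratings)


def composite_score(response, questions):
    """Roll several component ratings into one score (unweighted mean)."""
    return mean(response[q] for q in questions)


components = ["assessment", "contractor", "communication"]
print(f"Overall rated high/very high: {top_two_box(responses, 'overall'):.0f}%")
print(f"Mean composite score: {mean(composite_score(r, components) for r in responses):.2f}")
```

Reporting component scores alongside the composite helps pinpoint which part of the experience (e.g., contractor responsiveness) is pulling satisfaction down.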

The following homeowner survey examples were developed by state and local residential energy efficiency programs:

- EnergySmart: Survey about a homeowner's experience with the program

- Minnesota Community Energy Services Survey for homeowners about the program

- Wisconsin Energy Conservation Corporation:

Indirect Customer Satisfaction Metrics

Indirect metrics gauge customer satisfaction through signals of sustained or increasing demand for program services. Examples include:

- The number of customers who refer the program to others

- The number of new customers who heard of the program from neighbors

- The number of customers who return for additional services.

Data for these types of metrics can be collected through surveys at the beginning of the upgrade process. Programs can also use website analytics to look for patterns that suggest increased demand for the program based on customers seeking certain types of information on a program website (e.g., information about upgrade contractors).

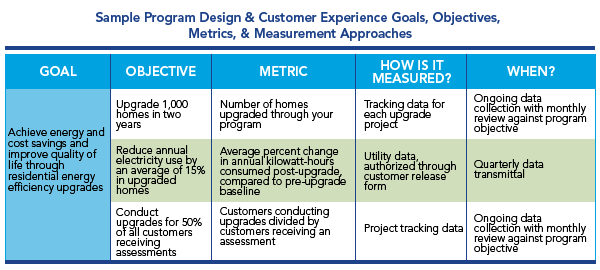

Once you have identified metrics, document how they will be measured. The table below, drawn from the Better Buildings Neighborhood Program’s “Creating an Evaluation Plan” worksheet, illustrates a simple way to document measurement strategies for program metrics linked to program goals. Although the presentation in the table is simple, some of the methods for measuring metrics can be quite complex. The Evaluation & Data Collection Develop Resources handbook provides a detailed description of types of evaluation metric data and how to collect and assess them.

You also need to identify two other parts of the strategy:

- Who will provide or collect the data (e.g., program staff, contractors, financial partners)?

- What quality assurance checks on the data will be required, and how will they be conducted? For example, data can be automatically or manually compared against a range of expected values to flag outlier data points.
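An automated range check of the kind described above might look like the following sketch. The field names and expected ranges are hypothetical; a program would set its own based on its housing stock and the data fields it collects. Flagged records are candidates for manual review, not automatically wrong.

```python
# Hypothetical expected ranges for a few project data fields.
EXPECTED_RANGES = {
    "conditioned_area_ft2": (400, 6_000),
    "pre_upgrade_kwh": (2_000, 40_000),
    "estimated_savings_pct": (0, 60),
}


def flag_outliers(record):
    """Return (field, value) pairs that are missing or outside the expected range."""
    flags = []
    for field, (low, high) in EXPECTED_RANGES.items():
        value = record.get(field)
        if value is None or not (low <= value <= high):
            flags.append((field, value))
    return flags


# Hypothetical project record with an implausibly high pre-upgrade usage value.
project = {"conditioned_area_ft2": 1_800, "pre_upgrade_kwh": 95_000,
           "estimated_savings_pct": 22}
print(flag_outliers(project))  # [('pre_upgrade_kwh', 95000)]
```

Running a check like this when contractors submit data, rather than at evaluation time, makes it easier to correct errors while the project details are still fresh.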

Once measurement strategies have been identified, program staff, contractors, and others responsible for collecting data should be trained on what data will be collected, at what frequency, and how to report it.

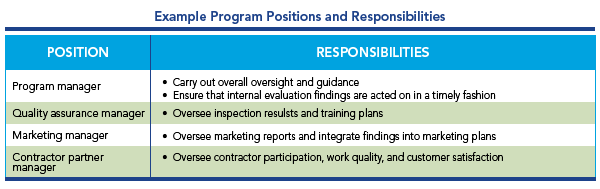

As part of evaluation planning, programs should establish responsibilities and schedules for review of evaluation results. Because evaluation is critically important, someone needs to take responsibility for it—otherwise, it is much less likely to receive the attention it needs. Key responsibilities are:

- Periodically check program metrics against overall goals and objectives

- Understand the level and trends in program activity

- Monitor customer satisfaction as an indicator of the overall health of the program

- Identify successes or challenges that can be used to assess and improve the program.
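Periodically checking metrics against goals can be as simple as comparing actual progress with where a straight-line path to the target would be on the review date. The sketch below uses a hypothetical target and dates. Because, as the tips below note, programs often ramp up slowly, a straight-line benchmark is only a rough guide.

```python
from datetime import date


def progress_check(actual, target, start, end, as_of):
    """Compare actual progress against a straight-line path to the target.

    Returns percent of target achieved, the straight-line expectation as of
    the review date, and whether the program is at or ahead of that pace.
    """
    total_days = (end - start).days
    elapsed = min(max((as_of - start).days / total_days, 0.0), 1.0)
    expected = target * elapsed
    return {
        "pct_of_target": 100.0 * actual / target,
        "expected_to_date": expected,
        "on_pace": actual >= expected,
    }


# Hypothetical objective: 1,000 upgrades between Jan 1, 2024 and Dec 31, 2026.
result = progress_check(actual=350, target=1_000,
                        start=date(2024, 1, 1), end=date(2026, 12, 31),
                        as_of=date(2025, 7, 1))
print(result)
```

A program that is behind pace early on may still be on a realistic trajectory; the value of the check is in prompting the review conversation, not in the number itself.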

An example of program positions and responsibilities is shown in the table below.

The process of internal review and decision-making to respond to evaluation results is discussed further in the handbook on assessing and improving programs and in the Evaluation and Data Collection handbook on communicating program impacts.

Tips for Success

In recent years, hundreds of communities have been working to promote home energy upgrades through programs such as the Better Buildings Neighborhood Program, Home Performance with ENERGY STAR, utility-sponsored programs, and others. The following tips present the top lessons these programs want to share related to this handbook. This list is not exhaustive.

Many program administrators have found that launching and scaling up a program often takes longer than planned, especially when forming partnerships with contractors and lenders. New energy efficiency programs often need at least 2-3 years to launch and become fully operational. Across programs, the time it takes to reach full operations depends on many factors, including the number of qualified contractors working in the area, the availability of funding, the level of stakeholder and partner support, the program’s goals and strategies, and the presence of unique program features that may take time to develop, such as community workforce agreements or loan products. Many program administrators found it helpful to set realistic expectations, both internally and with key partners and stakeholders, about how long it takes to get programs fully up and running. They also suggest celebrating and communicating achievements along the way.

- emPowerSBC in Santa Barbara, California, found that launching its program and scaling up took more time than expected. The launch of the program was delayed more than a year as the program modified its financing strategy from one that relied on residential PACE to one focused on a loan-loss reserve. Following the launch, hiring delays kept the program from being fully staffed for around six months. Contractors working with the program reported that it took three to twelve months for a lead to turn into a signed contract for upgrade services because homeowners took their time deciding whether to invest in energy efficiency.

- The Virginia State Energy Program (SEP) found that it was difficult for its three programs around the state—the Local Energy Alliance Program (LEAP), the Richmond Region Energy Alliance, and Community Alliance for Energy Efficiency (Cafe2)—to meet their upgrade targets in three years because the home performance industry in the state was still developing when the programs were initiated. These in-state programs started with little to no infrastructure in place and had to address barriers such as lack of qualified contractors before they could even begin offering home energy upgrades. For example, the programs found that contractors were reluctant to modify their business models and agree to undertake the paperwork and data collection the programs required. Over time, the programs developed strategies to work more effectively with contractors, such as holding monthly contractor meetings (in the case of LEAP) and establishing written Memoranda of Understanding with contractors to clarify mutual expectations (in the case of Cafe2). Virginia SEP advised that programs’ goals and timelines should reflect the starting conditions and the work that needs to be accomplished in order to achieve program goals.

- Enhabit, formerly Clean Energy Works Oregon, began with modest goals for a pilot project in Portland and then ramped up its ambitions as it expanded statewide. The goals for the program’s pilot project were to upgrade 500 homes in Portland, build a qualified workforce, and test its approach to service delivery. After the pilot, the program expanded to all of Oregon and upgraded over 3,000 homes around the state in three years.

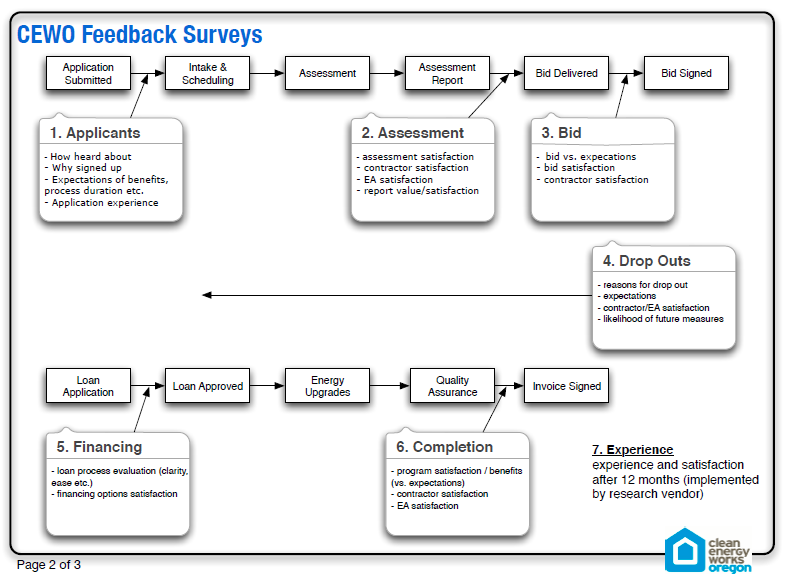

Measuring performance at key points in the upgrade process (e.g., assessments, conversion rates, and financing applications) has helped programs understand where their processes are working smoothly and where they are not. This information has helped them continuously improve their program design and implementation. To monitor progress, successful programs have combined information from their project tracking systems with customer surveys, information from call centers, and feedback from contractors and lenders to understand the customer experience. Make data accessible for program staff to track progress, identify successful strategies, and detect points of failure.

- Enhabit, formerly Clean Energy Works Oregon, established an extensive process for getting customer feedback at key points in the program delivery process to evaluate customer satisfaction and better understand why some homeowners chose to undertake upgrades while others did not. The program identified seven points in the program delivery process to gather information through feedback surveys and phone interviews: application, assessment, bid, drop-out, financing, completion, and experience after 12 months. The program credited this kind of customer communication and feedback as one of the keys to its ongoing success.

- Boulder County’s EnergySmart program sent an online customer feedback survey to homeowners who had completed upgrades. Among other things, the surveys affirmed customer satisfaction and identified an opportunity for word-of-mouth marketing: the vast majority of respondents said they would recommend the EnergySmart service to a friend or neighbor. The surveys also surfaced some weaknesses that the program resolved. For example, some respondents cited contractors’ lack of responsiveness and professionalism as an issue, which led the program to develop guidelines for professionalism and customer contact. Respondents also noted that the assessment report was long and confusing, leading the program to develop a new, customized report that was easier to follow and clearer about next steps.

- Connecticut’s Neighbor to Neighbor Energy Challenge used qualitative contractor and customer feedback combined with quantitative data to evaluate how well its outreach efforts led to home energy assessments. When informal contractor feedback alerted program managers that relatively few interested customers were following through to have assessments conducted on their homes, the program analyzed project data and found that only around a quarter of customers who expressed interest in an assessment had completed one. To diagnose the problem, the program analyzed data to see how customers were acquired, how long it took to send leads to contractors, and how long it took contractors to follow up with customers to arrange an assessment. Through qualitative analysis, the program found, among other things, that customers didn’t understand what they were signing up for and may have been unwilling to say “no” to young and enthusiastic outreach staff. The program also found that its staff wasn’t following up quickly enough with people who wanted more information. In response, the program improved its process for distributing leads to contractors (e.g., linking contractors to homeowners in 1-2 days), created a “receipt” for interested customers outlining next steps, and set up a system to call non-responsive leads after two weeks. With these and other steps, the program increased its close rate by 35% in the month after the changes were implemented.

Resources

The following resources provide topical information related to this handbook. The resources include a variety of information ranging from case studies and examples to presentations and webcasts. The U.S. Department of Energy does not endorse these materials.

This example home performance scorecard shows how a contractor compares to anonymized top and bottom scoring companies, based on their quality of measured installations, scope of work, customer satisfaction, and energy savings achieved.

Survey for Minnesota homeowners participating in the Community Energy Services pilot program about their experience during their home visit.

Survey for consultants participating in Green Madison and Me2 programs about their experiences with the programs.

Questionnaire for contractors participating in the Green Madison program about their overall experience, level of participation, training, and available resources.

Example Me2 and Green Madison process evaluation plan to conduct an in-depth investigation and assessment of the major program areas.

Survey for people who signed up to participate in the Me2 program for home performance assessments, but ultimately decided not to participate. The goal of the survey is to help improve services for future participants.

Participant survey sent to Me2 customers that have completed at least the initial Energy Advocate visit.

This report presents key findings and recommendations from the process evaluation of Clean Energy Works Oregon's (now Enhabit's) energy efficiency financing program. Table 1 provides a good list of key process evaluation research questions which may help others scope comprehensive process evaluations.

This report describes the process evaluation of a pilot project in Portland, Oregon, that informed the refinement and expansion of the program statewide into Clean Energy Works Oregon (now Enhabit).

EnergySmart Colorado uses surveys and a customer database to get feedback from homeowners that helps fine-tune program services and operations.

This presentation explains the importance of data collection and analysis for residential energy efficiency programs, common challenges related to data collection, and best practices for effective data collection and use.

Presentation describing how Conservation Services Group uses data to monitor market transformation and for internal QA/QC purposes.

This presentation describes steps programs can take to obtain useful feedback from customers regarding their programs.

This presentation covers the importance of collecting and evaluating program data, including data related to marketing efforts.

This paper describes the problems and issues that arise for energy efficiency programs as a result of common cost-effectiveness test implementation practice. It also provides recommendations for how to address these challenges.

This worksheet can help you organize your ideas and methods for creating an effective evaluation plan.

Sample phone survey template for program contractors.

This sample email survey template, created by the Better Buildings Neighborhood Program, was designed for programs to develop their own survey of successful program participants in order to assess customer experience.

This sample phone survey template, created by the Better Buildings Neighborhood Program, was designed for programs to use with applicants who have been screened out from participating in a program.

This sample phone survey template for program drop-outs, created by the Better Buildings Neighborhood Program, was designed for programs to find out why applicants that applied to participate in a program ultimately dropped out.

This document provides a menu of initial questions for a program administrator or implementer to build on and use in developing a real-time evaluation survey to collect qualitative data from contractors.

The Standard Energy Efficiency Data (SEED)™ Platform is a software application that helps organizations easily manage data on the energy performance of large groups of buildings. Users can combine data from multiple sources, clean and validate it, and share the information with others. The software application provides an easy, flexible, and cost-effective method to improve the quality and availability of data to help demonstrate the economic and environmental benefits of energy efficiency, to implement programs, and to target investment activity.

The U.S. Department of Energy's Better Buildings Residential Program released version 2.0 of a user-friendly tool for estimating the cost-effectiveness of a residential energy efficiency program based on program administrator inputs. Cost-effectiveness analysis compares the benefits (i.e., outputs or outcomes) associated with a program or a measure with the costs (i.e., resources expended) to produce them. Program cost-effectiveness is commonly used by public utility commissions to make decisions about funding programs or program approaches. Program designers, policy makers, utilities, architects, and engineers can use this tool to estimate the impact of different program changes on the cost-effectiveness of a program.

The State and Local Energy Efficiency Action Network (SEE Action) Evaluation, Measurement, and Verification (EM&V) Resource Portal serves as an EM&V resource one-stop shop for energy efficiency program administrators and project managers. The resources focus on tools and approaches that can be applied nationwide, address EM&V consistency, and are recognized by the industry.

The Building Energy Data Exchange Specification (BEDES, pronounced "beads" or /bi:ds/) is designed to support analysis of the measured energy performance of commercial, multifamily, and residential buildings, by providing a common data format, definitions, and an exchange protocol for building characteristics, efficiency measures, and energy use.

An Introduction to Measuring Energy Savings

This webinar covers evaluation, measurement and verification (EM&V) for energy efficiency programs and projects. In addition, it covers key areas such as savings estimates and how energy savings are measured.

Setting Baselines for Planning and Evaluation of Efficiency Programs

The key challenge with quantifying savings from end-use efficiency activities is the identification of an accurate baseline from which to determine the savings. Regardless of the protocol or procedure applied, all savings values are determined by estimating likely energy use in the absence of the program or project (the “counterfactual” scenario, or baseline). This webcast provides an introduction to considerations and common practices for defining baselines, the relationship between baselines and savings attribution, and examples of how different jurisdictions are addressing market baseline studies, setting baselines for retrofit measures, and market transformation program baselines.

Evaluation of Residential Behavior-Based Programs

Residential behavior-based (BB) programs use strategies grounded in the behavioral and social sciences to influence household energy use. These programs have unique evaluation challenges and usually require different evaluation methods than those currently employed for most other types of efficiency programs. This webcast provides an introduction to documenting the energy savings associated with BB programs and examples of how different jurisdictions are addressing BB program evaluation.

Developing an Evaluation Measurement and Verification Plan for Your Energy Efficiency Project/Program

This webcast discusses developing an Evaluation, Measurement, and Verification Plan for energy efficiency programs, including collecting data and analyzing program performance.

Developing an Evaluation, Measurement and Verification Plan For Residential Retrofit Programs

EM&V Basics, Tools and Resources to Assist EECBG and SEP Grantees

This webinar offers an introduction to EM&V basics, including data collection, tracking tools, M&V approaches, and reporting energy savings.

This guide supports the development, maintenance, and use of accurate and reliable Technical Reference Manuals (TRMs). TRMs provide information to estimate the energy and demand savings of end-use energy efficiency measures associated with utility customer-funded efficiency programs. This guide describes existing TRMs in the United States and provides recommendations for TRM best practices. It also offers related background information on energy efficiency; evaluation, measurement, and verification; and TRM basics.

This report provides a comprehensive review of a wide range of problems and inconsistencies in current cost-effectiveness test practices, and recommends a range of best practices to address them.

This guide details and explains the five types of general program evaluations and provides guidance on selecting the type of evaluation suited to the program to be evaluated, given the type of information required and budget limitations. It is intended for use by managers of both deployment and R&D programs within the U.S. Department of Energy's Office of Energy Efficiency and Renewable Energy (EERE), although most of the examples of evaluations pertain to deployment programs.

This report provides guidance and recommendations to help residential energy efficiency programs estimate energy savings more accurately. It identifies steps program managers can take to ensure precise savings estimates, apply impact estimates over time, and account for and avoid potential double counting of savings.

This guide provides background on the home improvement market in the U.S. and Canada and on end uses and systems in existing homes, as well as a description of energy efficiency program approaches and strategies.

This guide provides recommended benchmarking metrics for measuring residential program performance.

This guide describes a structure and several model approaches for calculating energy, demand, and emissions savings resulting from energy efficiency programs that are implemented by cities, states, utilities, companies, and similar entities.

The Uniform Methods Project: Methods for Determining Energy Efficiency Savings for Specific Measures

This report provides a set of model protocols for determining energy and demand savings that result from specific energy efficiency measures or programs. The methods described are among the most commonly used approaches in the energy efficiency industry for certain measures or programs; they draw from the existing body of research and best practices for energy efficiency evaluation, measurement, and verification (EM&V).
