Description

Conducting an evaluation requires that you work with a number of different parties, both internal and external, to ensure that:

- You and your evaluator(s) maintain a shared vision of the goals of the evaluation, and how you will get there

- Data transfers happen in a timely fashion

- Stakeholders are engaged in the evaluation and are given an opportunity to provide input on scope changes and interim deliverables, so they are more likely to find the results credible and act on the recommendations.

This handbook describes the steps necessary and resources available for successful third-party evaluations, including overseeing evaluation activities, reviewing evaluation deliverables, identifying and managing potential risks and evaluation scope changes, and communicating progress.

Conducting an evaluation builds on the preparations you made before this stage. Refer to the Evaluation & Data Collection – Develop Evaluation Plans handbook for guidance on identifying the right questions to ask, appropriate metrics to collect, and the processes needed to initiate third-party impact and process evaluations. See the Evaluation & Data Collection – Develop Resources handbook for information on how to identify and implement data collection systems and tools for an effective evaluation.

This handbook discusses the steps you should take to manage third-party impact and process evaluation activities. These steps include:

- Oversee evaluation activities

- Review evaluation deliverables

- Identify and mitigate potential risks

- Adjust scope and timeline to accommodate evaluation changes

- Communicate progress throughout the evaluation process.

Step by Step

Below are the critical steps for conducting an evaluation.

As well as monitoring the progress of the evaluation and whether staff and the evaluation team are performing as agreed upon during contract negotiations, you will need to take several steps to oversee evaluation activities once they begin:

- Review each program component’s evaluation plan, including the key data, metrics, and measurement strategies, and ensure that the evaluation team implements it.

- Host an initial meeting with the evaluation team. This should be in-person and should include at least the evaluator’s project lead and your team’s key contacts for the evaluation process. Use this meeting to make any necessary clarifications to the scope of work and timeline, as well as roles and responsibilities of key team members. Sharing information about the program itself is an important part of this meeting because it provides valuable context and perspective to the project team.

- Schedule periodic check-in meetings with the evaluation team to answer questions or provide clarification on evaluation deliverables and ensure that the original plans and any subsequent changes are understood and appropriately implemented.

- Coordinate the transfer of information from staff and subcontractors to the evaluation team to ensure that staff and subcontractors are complying with the protocols and procedures agreed upon in the contract negotiation and final scope of work. As the effectiveness of the evaluation depends on the quality and timeliness of information from your team, this coordination will be necessary throughout the evaluation period.

- Arrange for the evaluation team to contact and interview a sample of your program’s contractors and customers. To be considerate of their time, ask contractors and customers if they are willing to be contacted about the program at a later date. Customers could be asked during the rebate or loan application process if they are willing to be contacted in the future. Be cognizant of how often contractors and customers are contacted for surveys or interviews, whether they are for quality assurance or an evaluation. If they are contacted too often to provide feedback, they may be less likely to participate.
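The contact guidance above can be sketched as a simple filter over a contact list. This is only an illustration: the record fields (`name`, `consented`, `last_contacted`) and the 90-day minimum gap are assumptions made for the example, not values recommended by any program.

```python
from datetime import date, timedelta

# Hypothetical minimum gap between survey or interview requests.
MIN_DAYS_BETWEEN_CONTACTS = 90

def eligible_for_contact(contacts, today, min_gap_days=MIN_DAYS_BETWEEN_CONTACTS):
    """Return names of people who agreed to be contacted and were not contacted recently."""
    cutoff = today - timedelta(days=min_gap_days)
    eligible = []
    for person in contacts:
        if not person["consented"]:
            continue  # never contact someone who did not opt in
        last = person["last_contacted"]
        if last is None or last <= cutoff:
            eligible.append(person["name"])
    return eligible

# Illustrative records only.
contacts = [
    {"name": "Customer A", "consented": True, "last_contacted": None},
    {"name": "Customer B", "consented": True, "last_contacted": date(2024, 5, 20)},
    {"name": "Contractor C", "consented": False, "last_contacted": None},
    {"name": "Customer D", "consented": True, "last_contacted": date(2024, 1, 10)},
]

sample_pool = eligible_for_contact(contacts, today=date(2024, 6, 1))
```

The evaluation team would then draw its interview sample from `sample_pool` rather than from the full participant list.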

Where to Find Evaluation Reports from Residential Energy Efficiency Programs

Reviewing evaluation reports from other residential energy efficiency programs can provide insights into the evaluation activities you should consider for your program. Here are some national and regional repositories of energy efficiency program evaluations:

- The Better Buildings Neighborhood Program’s Evaluation Report webpage provides links to grantee evaluation reports.

- The California Measurement Advisory Council (CALMAC) provides a searchable database of evaluation reports on energy efficiency programs in the state.

- The Northeast Energy Efficiency Partnerships (NEEP) EM&V Forum’s Repository of State EM&V Studies contains links to historical and recently released studies and evaluation reports from across the northeast.

- The Northwest Energy Efficiency Alliance (NEEA) has completed several market research and evaluation reports, including reports for initiatives in the residential sector.

- The U.S. Energy Information Administration (EIA) State Energy Efficiency Program Evaluation Inventory provides a report and searchable spreadsheet of EM&V reports, including annual reports and impact and process evaluation reports.

Depending on the scope and scale of your evaluation, you may have several deliverables including interim and final reports. Take the time to review these to ensure that they meet the goals identified in your evaluation plan and provide the information expected by stakeholders.

You may need to distribute evaluation deliverables to key stakeholders for review. Reviewers should be identified and confirmed when you are developing your evaluation plans (refer to the Evaluation & Data Collection – Develop Evaluation Plans handbook).

- Provide reviewers with clear guidance on what to review, the review and evaluation schedule, and the process for providing feedback.

- Compile feedback for the evaluator to consider.

- Thank stakeholders and address any questions they raise with the evaluation team.

Identify issues, events, or other circumstances that could put your evaluation project at risk of not meeting milestones.

- Certain events, such as government rule changes, budget cuts, or significant market changes, can have a major impact on your original plan to complete the evaluation. Accommodate these events by adjusting your original evaluation plan.

- While it is important to involve stakeholders in the early phases of scoping evaluations, their expectations can change, especially when there is turnover in stakeholder staff. Stay in routine contact with key stakeholders, so that you can identify and act on any changes in their expectations whenever possible.

Continually monitor for risks and consider how to mitigate any issues that arise. Depending on their nature, these issues may be simply addressed through proactive communication, or may require some level of scope change and/or contract renegotiation.

Given the many moving parts and potential risks discussed above, be sure to capture their effect on the evaluation, from minor adjustments to the schedule to more substantial changes that could require contract modifications.

- Adjust timelines as necessary to reflect actual progress. A change to any one part of the evaluation scope of work can affect others, so review your entire timeline and make sure that a change to one element is reflected in all related elements. Gantt chart software can automatically carry a timetable change through to dependent tasks.

- If contract modifications are necessary, follow your organization’s contracting protocols to ensure that changes to scope, timeline, or budget are formalized and approved by both the contractor and your management.
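To illustrate how a change to one element ripples through related elements, here is a minimal sketch of the kind of dependency propagation a Gantt chart tool performs. The task names, dates, and durations are hypothetical, and the sketch assumes calendar days and that each task starts no earlier than the end of its prerequisites.

```python
from datetime import date, timedelta

# Hypothetical tasks: name -> (planned start, duration in calendar days, prerequisites)
tasks = {
    "data_transfer": (date(2024, 1, 8), 10, []),
    "customer_interviews": (date(2024, 1, 18), 14, ["data_transfer"]),
    "interim_report": (date(2024, 2, 1), 7, ["customer_interviews"]),
    "final_report": (date(2024, 2, 8), 14, ["interim_report"]),
}

def reschedule(tasks, delayed_task, delay_days):
    """Return {task: (start, end)} after pushing one task's start back by delay_days."""
    schedule = {}

    def resolve(name):
        if name in schedule:
            return schedule[name]
        start, duration, prereqs = tasks[name]
        if name == delayed_task:
            start += timedelta(days=delay_days)
        # A task cannot begin before every prerequisite has finished.
        for prereq in prereqs:
            start = max(start, resolve(prereq)[1])
        schedule[name] = (start, start + timedelta(days=duration))
        return schedule[name]

    for name in tasks:
        resolve(name)
    return schedule

baseline = reschedule(tasks, "data_transfer", 0)
revised = reschedule(tasks, "data_transfer", 5)
slip = (revised["final_report"][1] - baseline["final_report"][1]).days
```

In this example a five-day delay in the data transfer moves the final report by the same five days, because every later task depends on it. A delay of that size is the kind of change worth flagging to reviewers and stakeholders.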

Throughout the evaluation process, from kickoff to final report, it is critical to communicate progress to your staff, subcontractors, and stakeholders. As noted in previous steps, any changes to evaluation scope or timeline may impact the program resources you have identified to support evaluation activities. Sharing evaluation progress with program managers during the evaluation process allows program managers to learn about results on an ongoing basis and gives them an opportunity to ask questions. You should provide regular updates with information relevant to the audience, such as:

- Any schedule changes that impact the timing of interactions of key staff with evaluators.

- Any changes to scope or schedule that affect the type or schedule of deliverables and any necessary review from stakeholders.

- Any necessary framing of evaluation results so that they are well understood (see the Evaluation & Data Collection – Communicating Impacts handbook for more information).

- Interim report findings and recommendations that could guide process improvements (see the Program Design & Customer Experience – Assess and Improve Processes handbook for more information).

Tips for Success

In recent years, hundreds of communities have been working to promote home energy upgrades through programs such as the Better Buildings Neighborhood Program, Home Performance with ENERGY STAR, utility-sponsored programs, and others. The following tips present the top lessons these programs want to share related to this handbook. This list is not exhaustive.

Measuring performance at key points in the upgrade process (e.g., assessments, conversion rates, and financing applications) has helped programs understand where their processes are working smoothly and where they are not. This information has helped them continuously improve their program design and implementation. To monitor progress, successful programs have combined information from their project tracking systems with customer surveys, information from call centers, and feedback from contractors and lenders to understand the customer experience. Make data accessible for program staff to track progress, identify successful strategies, and detect points of failure.

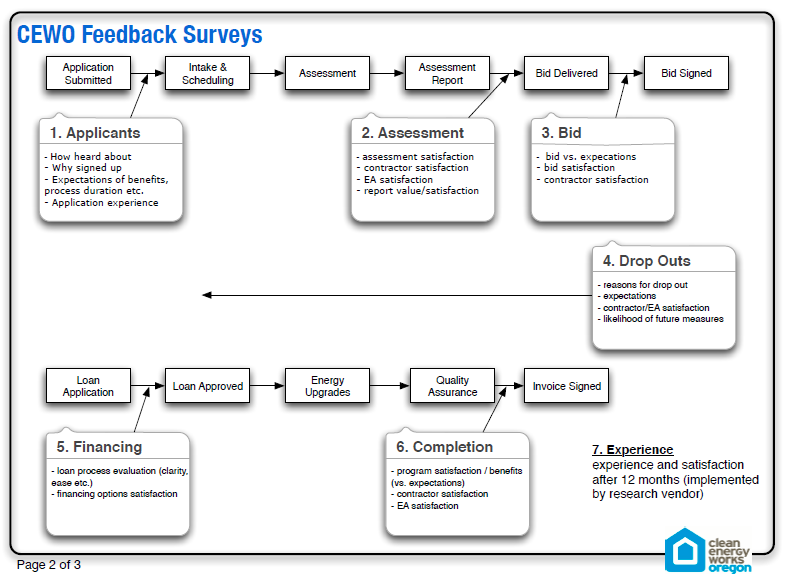

- Enhabit, formerly Clean Energy Works Oregon, established an extensive process for getting customer feedback at key points in the program delivery process to evaluate customer satisfaction and better understand why some homeowners chose to undertake upgrades while others did not. The program identified seven points in the program delivery process to gather information through feedback surveys and phone interviews: application, assessment, bid, drop-out, financing, completion, and experience after 12 months. The program credited this kind of customer communication and feedback as one of the keys to its ongoing success.

Source: Clean Energy Works Research Planning, Will Villota, CEWO, 2012 (Presented during January 19, 2012 Better Buildings Residential Neighborhood Program peer exchange call).

- Boulder County’s EnergySmart program sent an online customer feedback survey to homeowners who had completed upgrades. Among other things, the customer surveys affirmed customer satisfaction and identified the opportunity for word-of-mouth marketing. Surveys found that the vast majority of the respondents would recommend the EnergySmart service to a friend or neighbor. The surveys also surfaced some weaknesses that the program resolved. For example, some respondents noted contractors’ lack of responsiveness and professionalism as an issue, which led the program to develop guidelines for professionalism and customer contact. Surveys also noted that the assessment report was long and confusing, leading the program to develop a new, customized report that was easier to follow and clearer about next steps.

- Connecticut’s Neighbor to Neighbor Energy Challenge used qualitative contractor and customer feedback combined with quantitative data to evaluate how well its outreach efforts led to home energy assessments. When informal contractor feedback alerted program managers that relatively few interested customers were following through to have assessments conducted on their homes, the program analyzed project data and found that only around a quarter of customers who expressed interest in an assessment had completed one. To diagnose the problem, the program analyzed data to see how customers were acquired, how long it took to send leads to contractors, and how long it took contractors to follow up with customers to arrange for an assessment. Through qualitative analysis, the program found, among other things, that customers didn’t understand what they were signing up for and may have been unwilling to say “no” to young and enthusiastic outreach staff. The program also found that its staff wasn’t following up quickly enough with people who wanted more information. In response, the program improved its process for distributing leads to contractors (e.g., linking contractors to homeowners in 1-2 days), created a “receipt” for interested customers outlining next steps, and set up a system to call non-responsive leads after two weeks. With these and other steps, the program increased its close rate by 35% in one month after changes were implemented.
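Measuring performance at key points, as in the examples above, often comes down to comparing counts between consecutive stages of the upgrade process. The sketch below shows one way to do that. The stage names and counts are invented for illustration and are not data from any of the programs described.

```python
# Hypothetical counts of customers reaching each stage, in process order.
funnel = [
    ("expressed_interest", 400),
    ("assessment_completed", 100),
    ("bid_received", 60),
    ("upgrade_completed", 30),
]

def stage_conversion(funnel):
    """Return the share of customers who move from each stage to the next."""
    rates = {}
    for (prev_name, prev_count), (name, count) in zip(funnel, funnel[1:]):
        # Guard against division by zero for an empty stage.
        rates[f"{prev_name} -> {name}"] = count / prev_count if prev_count else 0.0
    return rates

rates = stage_conversion(funnel)

# The transition with the lowest rate is a candidate for closer investigation.
weakest_step = min(rates, key=rates.get)
```

With these illustrative numbers, the weakest step is the move from expressed interest to a completed assessment. A result like that would only indicate where to look; surveys and contractor feedback would still be needed to explain why customers drop out there.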

Better Buildings Neighborhood Program partners found that conducting surveys of program participants that focus on tangible, easy-to-answer questions, such as the timeliness of service and the quality of work, resulted in better feedback. By including open-ended questions and questions about non-energy benefits, partners were able to garner a broader range of information and a better understanding of who their customers are and what they value (e.g., comfort, cost savings). Partners also found that administering customer surveys during or immediately following completion of the customer’s energy upgrade led to a higher rate of response.

- Enhabit, formerly known as Clean Energy Works Oregon, requests feedback from all customers during the upgrade process to help assess how contractors can improve their customer service. Quarterly customer surveys of participants who have completed assessments and upgrades include questions about customer satisfaction with the contractor’s work. This feedback enables the program to track what is working and what is not, and to respond with improvements quickly.

- The Local Energy Alliance Program (LEAP), which serves Charlottesville, Virginia, and Northern Virginia, dramatically modified its home energy upgrade process in response to homeowner feedback. Recognizing that many homeowners found a several-thousand-dollar investment challenging, LEAP implemented a “staged upgrade” process that allowed homeowners to implement home energy upgrades over a period of time, dividing the financial investment into smaller payments.

Many Better Buildings Neighborhood Program partners found that it was critically important to use compatible formats for data sharing and reporting with partners. Aligning data formats and collection plans with national data formats (e.g., Home Performance XML schema (HPXML), Standard Energy Efficiency Data (SEED) platform, Building Energy Data Exchange Specification (BEDES)) ensured compatibility for aggregation and reporting.

- For Arizona Public Service’s (APS) Home Performance with ENERGY STAR® Program, a lack of transparency and access to data meant it took hours each month to compile progress reports. Coordination with trade allies was difficult for similar reasons–both the utility and its contractors lacked visibility into project status and task assignment, as well as the ability to identify program bottlenecks, which impacted APS customer service. Program delivery metrics, from administrative overhead to customer and trade ally satisfaction, were lower than expected. APS then began the search for a more dynamic software platform to engage customers, track and manage projects, empower trade allies, and analyze and report results. The program needed HPXML, an open standard that enables different software tools to easily share home performance data. The new HPXML-compliant platform, EnergySavvy’s Optix Manage, resulted in higher cost effectiveness and greater satisfaction for the program, including 50% less administrative time to review and approve projects, a 66% reduction in data processing time for APS reporting, 31% less contractor administrative time to submit projects, and a three-fold increase in trade ally satisfaction. HPXML also had the added benefit that contractors can choose their own modeling software.

- The New York State Energy Research & Development Authority (NYSERDA) heard from home performance contractors and other stakeholders that a more streamlined data collection process was needed to reduce the paperwork burden and time spent on a per-project basis. In response, the program launched the NY Home Performance Portal in July 2013. This web-based interface made it easier for customers to choose and apply for the home performance program and made the application process for a home energy assessment clear, fast, and simple. In 2015, NYSERDA further refined its data collection process and began processing all projects in a web-enabled interface designed to facilitate program coordination. This new platform allowed NYSERDA to automate project approvals for 85-90% of projects. In addition, the platform supported HPXML, which facilitates data sharing among multiple New York programs, thereby reducing the administrative burden for contractors participating in multiple programs. It allowed NYSERDA to automate the work scope approval process through validation of standardized data. An additional benefit of HPXML for NYSERDA was creating an open modeling software market.

- The Massachusetts Department of Energy Resources (MassDOER) provides statewide oversight of energy efficiency programs administered by utilities under the Mass Save brand. Originally, contractors from Conservation Services Group, Inc. and Honeywell International Inc. used audit software customized for the program in their home energy assessments. When Mass Save piloted the Home MPG program, contractors were also required to generate an Energy Performance Scorecard for each home. The existing audit software, however, did not have this capability. To address this problem, software developers added the Energy Performance Scorecard capability, so the contractors could use the same software to record the audit results and generate the scorecard. Despite implementation delays, this solution allows the use of Energy Performance Scorecards to potentially expand statewide.
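The idea of aligning partner data to one shared format can be sketched as a field mapping plus a completeness check. The field names and the contractor column names below are hypothetical placeholders chosen for this example; they are not actual HPXML, SEED, or BEDES terms, and a real implementation would follow the published standard.

```python
# Hypothetical shared field names (NOT actual HPXML, SEED, or BEDES terms).
REQUIRED_FIELDS = {
    "project_id",
    "assessment_date",
    "measure_type",
    "estimated_kwh_savings",
}

# Hypothetical mapping from one contractor's export columns to the shared names.
CONTRACTOR_A_MAP = {
    "ProjID": "project_id",
    "AuditDate": "assessment_date",
    "Measure": "measure_type",
    "kWhSaved": "estimated_kwh_savings",
}

def to_shared_format(record, field_map):
    """Rename a record's fields to the shared names and list any required fields still missing."""
    converted = {field_map.get(key, key): value for key, value in record.items()}
    missing = sorted(REQUIRED_FIELDS - set(converted))
    return converted, missing

# Illustrative record that lacks a savings estimate.
raw = {"ProjID": "A-001", "AuditDate": "2024-03-15", "Measure": "air sealing"}
converted, missing = to_shared_format(raw, CONTRACTOR_A_MAP)
```

Flagging missing fields when data arrives, rather than at reporting time, gives the program a chance to go back to the contractor while the project is still fresh.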

Though potentially challenging, establishing relationships for sharing energy consumption data is critical for evaluating program impact on energy and cost savings. Many Better Buildings Neighborhood Program partners found success by approaching utilities during the program planning phase, or at least several months in advance of when they planned to start collecting data, to outline shared goals, assets, tools, needs and constraints. Clear and concise data requests helped speed up utilities’ response times for providing the data and alleviated utility concerns and questions regarding data needs.

- Energize Phoenix formed a partnership with the local electric utility, Arizona Public Service (APS), while designing the program and coordinated with them throughout program development. Energize Phoenix found that understanding Arizona Public Service’s concerns and challenges related to data sharing was a key ingredient in forging a successful partnership, as was instituting a formal agreement to clarify roles and responsibilities.

- Southeast Energy Efficiency Alliance (SEEA) found that not all of its programs were successful in obtaining utility bill data. Common obstacles included that the utility did not have the technology infrastructure to easily export the information, would only release data for a fee (based on how many records were pulled), or simply did not have the time or resources to provide the information even if the program had a signed client release form from the homeowner. Among SEEA's programs, those that were most successful in obtaining utility billing information (including NOLA WISE in New Orleans, Louisiana; Local Energy Alliance Program (LEAP) in Charlottesville, Virginia; Atlanta SHINE in Atlanta, Georgia; and DecaturWISE in Decatur, Georgia) consulted with the utilities to determine what information the program needed to include in the client release form. Additionally, some programs developed a written memorandum of understanding with the utility specifying data collection and transfer roles and responsibilities. SEEA programs also found it best to make data requests to utilities on a quarterly basis to minimize the burden on the utility, as many utilities do not have staff dedicated to data exporting. Some programs received data more frequently, but in these situations the utility had the means to easily pull and export data.

- When local utilities Philadelphia Gas Works (PGW) and Philadelphia Electric Company (PECO) shared customers’ energy usage data with EnergyWorks, all parties made sure that the proper data sharing requirements were observed and all parties signed the necessary forms. Philadelphia EnergyWorks built its customer data release approval language into the program’s loan application form to minimize the number of additional forms that a customer or contractor would need to handle.

- EnergySmart in Eagle County, Colorado, successfully developed partnerships with utilities during and after the Better Buildings Neighborhood Program grant period, but in hindsight found it would have been more beneficial to engage utilities prior to submitting the original DOE grant application. By not fully engaging utilities up front, EnergySmart created an environment in which the utilities were only partially included in the program and retained similar or redundant in-house services. As EnergySmart moved forward, it was able to gain the trust of the utilities by offering help, data, and information. EnergySmart also shared its results with the utilities’ management and boards of directors. Through this gained trust, utilities were more willing to share data.

Resources

The following resources provide topical information related to this handbook. The resources include a variety of information ranging from case studies and examples to presentations and webcasts. The U.S. Department of Energy does not endorse these materials.

Homeowner data collection survey created by RePower.

This data intake template spreadsheet provides a way to track home energy performance metrics.

The Connecticut Neighbor to Neighbor Energy Challenge developed this form for authorization to obtain household energy information.

Survey for Minnesota home owners participating in Community Energy Services pilot program about their experience at their home visit.

Survey for consultants participating in Green Madison and Me2 programs about their experiences with the programs.

Questionnaire for contractors participating in the Green Madison program about their overall experience, level of participation, training, and available resources.

Participant survey sent to Me2 customers that have completed at least the initial Energy Advocate visit.

Survey for people who signed up to participate in the Me2 program for home performance assessments, but ultimately decided not to participate. The goal of the survey is to help improve services for future participants.

This technical reference manual provides detailed, comprehensive documentation of resource and non-resource savings corresponding to the Energy Efficiency Fund program and individual Conservation and Load Management (C&LM) program technologies.

This report is an update of findings from three prior evaluations of Eversource's Home Energy Reports (HERs) Pilot Program. The HERs pilot program began in January 2011. The pilot program randomly selected residential customers to whom it sent reports rating their energy use, comparing it to that of their neighbors, and suggested ways for the households to save energy.

This report includes a billing analysis, process evaluation, and baseline study for the Connecticut Residential New Construction (RNC) program. It also includes the results of the process evaluation.

This report for the Connecticut Energy Efficiency Board provides a review of best practices in impact evaluation, offers recommendations for calculating oil and propane savings, and discusses the impact evaluation findings for the Home Energy Solutions (HES) and Home Energy Solutions-Income Eligible (HES-IE) Programs. The best practices review provides an overview of key evaluation protocol and guideline documents.

This report for the Connecticut Energy Efficiency Board documents the difficulties that evaluators and programs in Connecticut faced in conducting evaluation studies and makes recommendations for improving data quality and consistency.

This report is the process evaluation of the Connecticut Multifamily (MF) Initiative, which leveraged the state's Home Energy Solutions (HES) and Home Energy Solutions-Income Eligible (HES-IE) programs. The objective of this process evaluation is to provide actionable recommendations about how to improve the design, delivery, and administration of the MF Initiative.

This evaluation plan for the New Hampshire Public Utilities Commission includes a market assessment framework which includes indicators of progress toward market transformation; evaluation recommendations for the 2015-2016 program implementation period; and a 6-year evaluation plan for each program or customer sector.

This evaluation and research plan sets out a proposed process for establishing and executing a detailed evaluation and research plan for New Jersey's Clean Energy Program.

This report summarizes the impact analyses of National Grid's and Eversource Energy's Home Energy Report (HER) programs. The evaluation team conducted three distinct impact analyses related to these HER programs: Cohort-Specific Impact Analysis; Mapping Analysis; and Dual Treatment Analysis.

This report identifies opportunities for Connecticut's Home Energy Solutions program (HES) to increase savings related to air sealing, duct sealing, and insulation.

This report presents the process evaluation results on the statewide Home Upgrade Program and includes findings on program operations, participant engagement, non-energy impacts, contractor characteristics, and contractor-customer interactions.

The study involved on-site visits to 180 single-family homes across Connecticut. The team assessed compliance with the weatherization standard using both the prescriptive and performance paths and made recommendations to improve program quality.

This study examined consumer awareness and opinions concerning the New Jersey Clean Energy Program (NJCEP). Research areas included New Jersey homeowners' awareness of NJCEP, an assessment of attitudes towards energy efficiency, the main benefits associated with energy efficiency, key drivers associated with purchasing energy efficient products, the best methods for increasing consumer awareness of NJCEP, and obstacles to implementing energy efficiency measures in the home.

These Multifamily Technical Reference Manuals (TRMs) provide documentation for the Trust's calculation of energy and demand savings from energy efficiency measures.

This report presents the results from a comprehensive impact and process evaluation of Efficiency Maine's Low-Income Multifamily Weatherization Program.

This study identifies actionable strategies and innovations to improve the multifamily program performance, realization rates, and overall program cost-effectiveness for both the residential and commercial sectors.

This report presents the results of the first‐year process and impact evaluation of Berkshire Gas' Home Energy Report (HER) program. The primary objective of the program is to provide residential households with information on their gas consumption and tips on how to save energy to prompt them to take action to reduce their natural gas usage.

This report includes evaluation analysis and findings from the Eversource New Hampshire Home Energy Report pilot program.

This memo provides a review of the New Jersey Comfort Partners Energy Saving Protocols, recommends changes to the calculations and additional calculation protocols for measures not included, and calculates engineering estimates for those proposed energy savings formulas.

This report presents the results of the evaluation of National Grid Rhode Island's 2014 EnergyWise program. EnergyWise is designed to achieve energy savings in single family (1-4 unit) residential homes by directly installing efficient lightbulbs and water heating measures, providing devices for homeowner use, and offering building shell retrofit rebates.

This report provides the electric and natural gas impacts from the suite of National Grid Multifamily Retrofit Programs as determined through a billing analysis.

This report documents findings and recommendations from an impact evaluation of the California Energy Commission’s California Comprehensive Residential Retrofit program, a statewide energy upgrade program funded by the American Recovery and Reinvestment Act of 2009. The program funded local and regional subrecipients to develop and test initiatives aimed at transforming the residential energy upgrade market and building an infrastructure for whole-building energy upgrades. These local and regional governments collaborated with California’s major utilities to jointly conduct the statewide Energy Upgrade California program.

The Multi-State Residential Retrofit Project is a residential energy-efficiency pilot program, funded by a competitive U.S. State Energy Program (SEP) award through the U.S. Department of Energy. The Multi-State Project operates in four states: Alabama, Massachusetts, Virginia, and Washington. During the course of this three-year process evaluation, Cadmus worked closely with NASEO and the four states to collect information about the programs from many perspectives, including: State Energy Office staff, program implementers, homeowners, auditors/contractors, real estate professionals, appraisers, lenders, and utility staff. This report discusses: the project’s context; its goals; the evaluation approach and methods; cross-cutting evaluation results; and results specific to each of the four states.

This webinar series is intended for state officials starting or expanding their EM&V methods for a wide range of efficiency activities including utility customer-funded programs, building energy codes, appliance and equipment standards, energy savings performance contracting, and efficiency programs that support pollution reduction goals or regulations.

This presentation describes how programs have leveraged data to increase program energy savings, with a spotlight on advanced and real-time monitoring and verification (M&V 2.0), contractor scorecards, and intelligent quality assurance (QA) and monitoring.

This presentation covers the current pilot project testing M&V 2.0 as an evaluation tool, facilitated by the Connecticut Department of Energy and Environmental Protection (CT DEEP). Speakers on this panel presented examples of how whole building modeling is currently being used for M&V and its potential future applications. Speakers also discussed benchmarking, data access and other protocols, and what experience with efficiency programs can teach us so that we can build upon it.

The Best Practices Self-Benchmarking Tool can be used to identify your programs' strengths, areas needing improvement, and strategies for improving them, based on the results of the Best Practices Study.

The Energy Data Accelerator Toolkit is a collection of resources featured in the Better Buildings Solution Center that will enable other utilities and communities to learn and benefit from the work of the Accelerator. It describes the best practices that enabled cities, utilities, and other stakeholders to overcome whole-building data access barriers.

This toolkit is a comprehensive guide to utility benchmarking for the multifamily sector. Benchmarking 101 describes the benefits of tracking utility data and explains how to begin the process. Utility Benchmarking Step-by-Step outlines a six-step approach to utility benchmarking. Policies and Programs summarizes utility benchmarking requirements for HUD programs, opportunities for financial assistance, and HUD programs that support green retrofits.

A list of building energy software packages, some of which are available for free or for a small fee.

The State and Local Energy Efficiency Action Network (SEE Action) Evaluation, Measurement, and Verification (EM&V) Resource Portal serves as an EM&V resource one-stop shop for energy efficiency program administrators and project managers. The resources focus on tools and approaches that can be applied nationwide, address EM&V consistency, and are recognized by the industry.

The Building Energy Data Exchange Specification (BEDES, pronounced "beads" or /bi:ds/) is designed to support analysis of the measured energy performance of commercial, multifamily, and residential buildings, by providing a common data format, definitions, and an exchange protocol for building characteristics, efficiency measures, and energy use.

Home performance extensible markup language (HPXML) is a national Building Performance Institute Data Dictionary and Standard Transfer Protocol created to reduce transactional costs associated with exchanging information between market actors. This website provides resources to help stakeholders implement HPXML and stay updated on its development.

The Standard Energy Efficiency Data (SEED)™ Platform is a software application that helps organizations easily manage data on the energy performance of large groups of buildings. Users can combine data from multiple sources, clean and validate it, and share the information with others. The software application provides an easy, flexible, and cost-effective method to improve the quality and availability of data to help demonstrate the economic and environmental benefits of energy efficiency, to implement programs, and to target investment activity.

EM&V Basics, Tools and Resources to Assist EECBG and SEP Grantees

This webinar offers an introduction to EM&V basics, including data collection, tracking tools, M&V approaches, and reporting energy savings.

Energy Efficiency and Conservation Loan Program Webinar Series: #1 Overview and Cost Effectiveness

This webinar is the first in a series of six hosted by the USDA Rural Utilities Service (RUS) on the Energy Efficiency and Conservation Loan Program (EECLP). It provides an overview of the program, covers its requirements and benefits, and discusses steps you can take to evaluate the cost effectiveness of energy program options.

Energy Efficiency and Conservation Loan Program Webinar Series: #2 Evaluation, Monitoring & Verification

This webinar is the second in a series of six hosted by the USDA Rural Utilities Service (RUS) on the Energy Efficiency and Conservation Loan Program (EECLP). It covers the key concepts of Evaluation, Monitoring & Verification (EM&V) and gives an overview of the full process, from estimating savings before programs are implemented to measuring and verifying the savings at the end. The webinar also covers EM&V frameworks, evaluation plans, technical reference manuals, and measurement and verification studies.

Energy Efficiency and Conservation Loan Program Webinar Series: #4 Residential Energy Efficiency Deep Dive, Part Two

This webinar is the fourth in a series of six hosted by the USDA Rural Utilities Service (RUS) on the Energy Efficiency and Conservation Loan Program (EECLP). The second in a two-part series, this webinar shares best practices from the more than 40 competitively selected state and local governments that participated in the U.S. Department of Energy’s Better Buildings Neighborhood Program. It focuses on data collection and continuous improvement, partnering with financial institutions, community-based outreach, and quality assurance of contractor work. It also features a case study from Jackson Electric Member Corporation about its audit tools, rebates and loans, tracking and reporting, and marketing and advertising strategies.

Volume 1 of the Better Buildings Neighborhood Program Evaluation Report provides findings from a comprehensive impact, process, and market effects evaluation of the program period, spanning from September 2010 through August 2013.

Volume 2 of the Better Buildings Neighborhood Program Evaluation Report comprises a measurement and verification process, as well as billing regression analysis on projects with sufficient utility bill data, to determine gross verified savings.

Volume 3 of the Better Buildings Neighborhood Program Evaluation Report statistically identifies factors associated with successful residential energy upgrade programs using a survey sampling, cluster analysis, and multivariate regression approach.

Volume 4 of the Better Buildings Neighborhood Program Evaluation Report assesses the degree to which the Better Buildings Neighborhood Program met its process goals and objectives to identify the most effective program design and implementation approaches.

Volume 5 of the Better Buildings Neighborhood Program Evaluation Report provides findings from a comprehensive impact, process, and market effects evaluation of the program period, spanning from September 2010 through August 2013.

This resource provides best practices and highlights case studies for how utilities, policymakers, building managers, and community stakeholders can improve access to energy usage data while working towards the goal of improving efficiency in their communities.

This guide supports the development, maintenance, and use of accurate and reliable Technical Reference Manuals (TRMs). TRMs provide information to estimate the energy and demand savings of end-use energy efficiency measures associated with utility customer-funded efficiency programs. This guide describes existing TRMs in the United States and provides recommendations for TRM best practices. It also offers related background information on energy efficiency; evaluation, measurement, and verification; and TRM basics.

This report looks into residential lighting savings assumptions found in Technical Reference Manuals (TRMs) throughout the Northeast and Mid-Atlantic regions to understand what values were being used for key metrics such as hours of use, delta watt, and measure life. It provides the opportunity to view completed Standardized Methods Forms to compare evaluation methodology and results.

This guidance document provides background and instructions for program administrators to use the data collected by smart thermostats to calculate energy savings for a program.

New advanced Information and Communications Technologies (ICT) are pouring into the marketplace and are stimulating new thinking and a shift in the energy efficiency EM&V paradigm. These emerging technologies, including advanced data collection and analytic tools, are purported to provide timely analytics on program results and efficacy. This report reviews how new data analytic tools help identify savings opportunities and engage customers in programs like never before, and explores the potential for advanced data collection (e.g., AMI, smart meters) and data analytics to improve and streamline the evaluation process.

The Uniform Methods Project: Methods for Determining Energy Efficiency Savings for Specific Measures

This report provides a set of model protocols for determining energy and demand savings that result from specific energy efficiency measures or programs. The methods described are among the most commonly used approaches in the energy efficiency industry for certain measures or programs; they draw from the existing body of research and best practices for energy efficiency evaluation, measurement, and verification (EM&V).

This report presents an analysis of data for residential single-family projects reported by 37 organizations that were awarded federal financial assistance (cooperative agreements or grants) by the U.S. Department of Energy’s Better Buildings Neighborhood Program. The report characterizes the energy-efficiency measures installed for single-family residential projects and analyzes energy savings and savings prediction accuracy for measures installed in a subset of those projects.

Among the many benefits ascribed to energy efficiency is the fact that it can help create jobs. Although this is often used to motivate investments in efficiency programs, verifying job creation benefits is more complicated than it might seem at first. This paper identifies some of the issues that contribute to a lack of consistency in attempts to verify efficiency-related job creation. It then proposes an analytically rigorous and tractable framework for program evaluators to use in future assessments.